A Texture Superpixel Approach to Semantic Material Classification for Acoustic Geometry Tagging

Published in CHI Conference on Human Factors in Computing Systems, 2021

Recommended citation: Colombo, M., Dolhasz, A. and Harvey, C., 2021, May. A texture superpixel approach to semantic material classification for acoustic geometry tagging. In Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems (pp. 1-7). https://dl.acm.org/doi/abs/10.1145/3411763.3451657

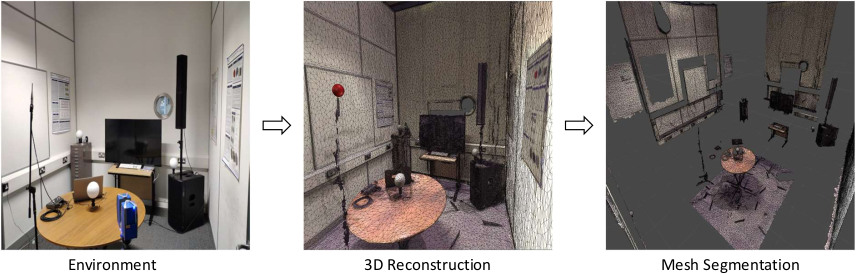

Current audio rendering algorithms enable efficient sound propagation that reflects the acoustic properties of real environments. One factor affecting the realism of acoustic simulations is the mapping between an environment’s geometry and the acoustic information of the materials it represents. We present a pipeline that infers material characteristics from their visual representations, automating this mapping. An image classifier estimates semantic material information from textured meshes and maps the predicted labels to a database of measured frequency-dependent absorption coefficients. Trained on material image patches generated from superpixels, it performs inference on meshes by decomposing their unwrapped textures into patches. The most frequent label among the predicted texture patches determines the acoustic material assigned to the input mesh. We test the pipeline on a real environment, capturing a conference room and reconstructing its geometry from point cloud data. We estimate a Room Impulse Response (RIR) of the virtual environment and compare it against a measured counterpart.
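
The label-aggregation step described in the abstract (most frequent patch label, then a lookup of frequency-dependent absorption coefficients) can be sketched roughly as follows. This is a minimal illustration and not the authors' implementation: the function name `assign_acoustic_material`, the band list `BANDS_HZ`, the table `ABSORPTION_DB`, and every coefficient value in it are hypothetical placeholders rather than measured data from the paper.

```python
from collections import Counter

# Hypothetical octave-band centre frequencies in Hz (an assumption, not
# taken from the paper).
BANDS_HZ = (125, 250, 500, 1000, 2000, 4000)

# Placeholder absorption coefficients per band. These numbers are purely
# illustrative; a real pipeline would read a database of measured values.
ABSORPTION_DB = {
    "carpet": (0.05, 0.10, 0.20, 0.35, 0.50, 0.60),
    "wood": (0.15, 0.11, 0.10, 0.07, 0.06, 0.07),
    "glass": (0.35, 0.25, 0.18, 0.12, 0.07, 0.04),
}


def assign_acoustic_material(patch_labels):
    """Pick the most frequent patch label and look up its coefficients.

    patch_labels: labels predicted by a classifier, one per texture patch
    of a single mesh. Returns (label, {frequency_hz: coefficient}).
    """
    if not patch_labels:
        raise ValueError("at least one patch label is required")
    # Majority vote; on a tie, Counter keeps the label seen first.
    label, _count = Counter(patch_labels).most_common(1)[0]
    if label not in ABSORPTION_DB:
        raise KeyError(f"no absorption data for material {label!r}")
    return label, dict(zip(BANDS_HZ, ABSORPTION_DB[label]))
```

Calling `assign_acoustic_material(["wood", "carpet", "wood"])` would select `"wood"` for the mesh and return its per-band coefficients. A plain majority vote ignores classifier confidence; weighting votes by predicted probability or patch area is one possible variation, though the abstract only mentions the most frequent label.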